Gradient exceptionality in Maximum Entropy grammar with lexically specific constraints

Abstract

The number of exceptions to a phonological generalization appears to gradiently affect its productivity. Generalizations with relatively few exceptions are relatively productive, as measured by regularization tendencies, nonce word productions, and other psycholinguistic tasks. Gradient productivity has previously been modeled with probabilistic grammars, including Maximum Entropy Grammar, but these models often fail to capture the fixed pronunciations of the existing words of a language, as opposed to nonce words. Lexically specific constraints allow existing words to be produced faithfully while permitting variation in novel words that are not subject to those constraints. When each word has its own lexically specific version of a constraint, an inverse correlation between the number of exceptions and the degree of productivity is straightforwardly predicted.
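To make the contrast between existing and nonce words concrete, here is a minimal sketch of how a Maximum Entropy grammar assigns probabilities to candidates. It uses the standard MaxEnt formulation, in which a candidate's probability is proportional to the exponential of its negative weighted violation sum. The constraint names (`General`, `Lexical_word42`), the candidate labels, and all weights are hypothetical values chosen for illustration; they are not taken from the paper.

```python
import math

def maxent_probs(violations, weights):
    """Return a MaxEnt probability for each candidate.

    violations: dict mapping candidate -> {constraint name: violation count}
    weights:    dict mapping constraint name -> non-negative weight
    """
    # Harmony is the negative weighted sum of constraint violations.
    harmonies = {
        cand: -sum(weights[c] * n for c, n in viols.items())
        for cand, viols in violations.items()
    }
    # Normalize exp(harmony) over the candidate set.
    z = sum(math.exp(h) for h in harmonies.values())
    return {cand: math.exp(h) / z for cand, h in harmonies.items()}

# Hypothetical weights: one general constraint and one lexically
# specific constraint indexed to a single existing exceptional word.
weights = {"General": 2.0, "Lexical_word42": 6.0}

# Existing exceptional word: the regular candidate violates the word's
# own lexically specific constraint, the exceptional one violates General.
existing = {
    "exceptional": {"General": 1},
    "regular": {"Lexical_word42": 1},
}

# Nonce word: no lexically specific constraint applies, so only the
# general constraint distinguishes the two candidates.
nonce = {
    "exceptional": {"General": 1},
    "regular": {},
}

p_existing = maxent_probs(existing, weights)
p_nonce = maxent_probs(nonce, weights)

print(p_existing)
print(p_nonce)
```

With these assumed weights, the existing word surfaces in its exceptional form almost categorically, while the nonce word still varies between the two candidates, with the regular candidate more probable. The sketch does not include learning, so it does not by itself derive the predicted relation between the number of exceptions and productivity.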

Documents:

Paper in Catalan Journal of Linguistics 15 (2016)

Slides from the Exceptionality Workshop associated with the 12th Old World Conference on Phonology (OCP) in Barcelona, January 2015

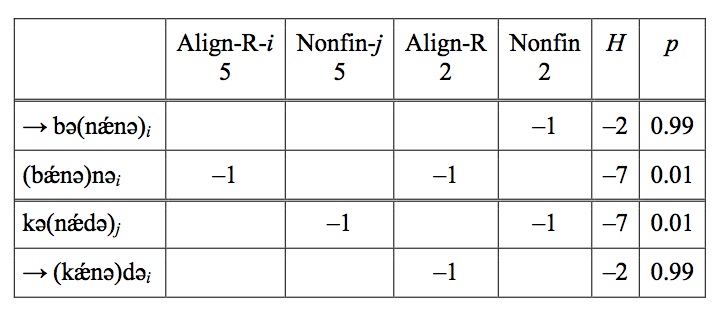

Sample tableau with lexically specific constraints